Do we need humans for user research?

There's no Turing back after this.

A recent controversy around “AI-generated users” has raised the question—do we need humans for user research? Janelle Ward wades into the debate.

A few months ago, with the release of ChatGPT, discussions about artificial intelligence became rampant on social media. AI advancements have the potential for great impact across a wide range of professional fields, with UX research being no exception.

For researchers, the first posts to emerge discussed how to (or why not to) use AI to accomplish tasks like fleshing out study design or synthesizing data from qualitative research.

Recently, however, the discussion has turned to another issue that impacts the core of our work:

Do we need actual humans to conduct user research?

The question is far from hypothetical. There are already companies marketing access to AI-generated users.

The argument goes: AI-generated users mimic the characteristics and perspectives of real users. Tech companies can purchase access to these AI-generated users and then “user test” their products without the hassle and cost of recruiting humans.

The companies offering AI-generated users sell their services as time-saving. Indeed, they would be. Participant recruitment can be one of the most time-consuming parts of conducting UX research, especially in companies that don’t have easy access to their users or haven’t had the time or resources to build a panel of representative users for testing.

But in this case? It’s not all about the money.

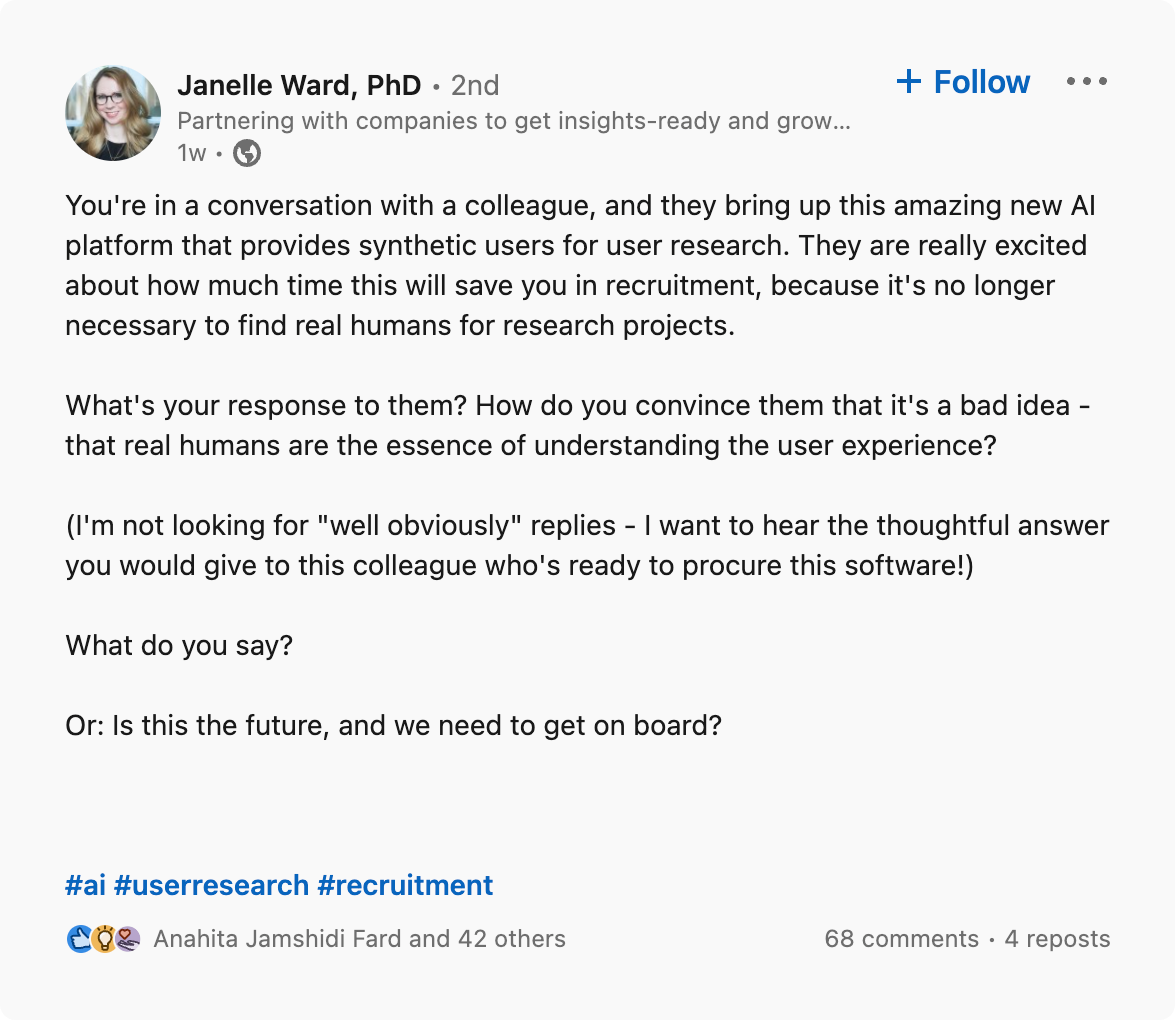

I suspected that, for researchers, the issues would extend far beyond convenience. With this in mind, I posted on LinkedIn, posing the following hypothetical scenario:

As anticipated, researchers have a lot to say on this subject. Ruben Stegbauer kicked it off with an excellent overview of issues to consider:

Ruben covered aspects related to how expert researchers approach their practice, like questioning biases and comparing attitudinal and behavioral data.

He mentioned issues related to AI as a tool, such as difficulty assessing validity. And he noted that “cheap and easy” might be a competitive disadvantage for companies that decide to take this route.

From there, research experts from various domains chimed in with a range of responses: an empirical “let’s test it” view, a rejectionist view of a (still) flawed technology, and a practical view, with a reminder that AI is not human.

The empirical view: Let’s test it

How do we really know that using AI-generated users will generate subpar results?

There are lots of reactions that tell us why. But what if we start with an empirical view?

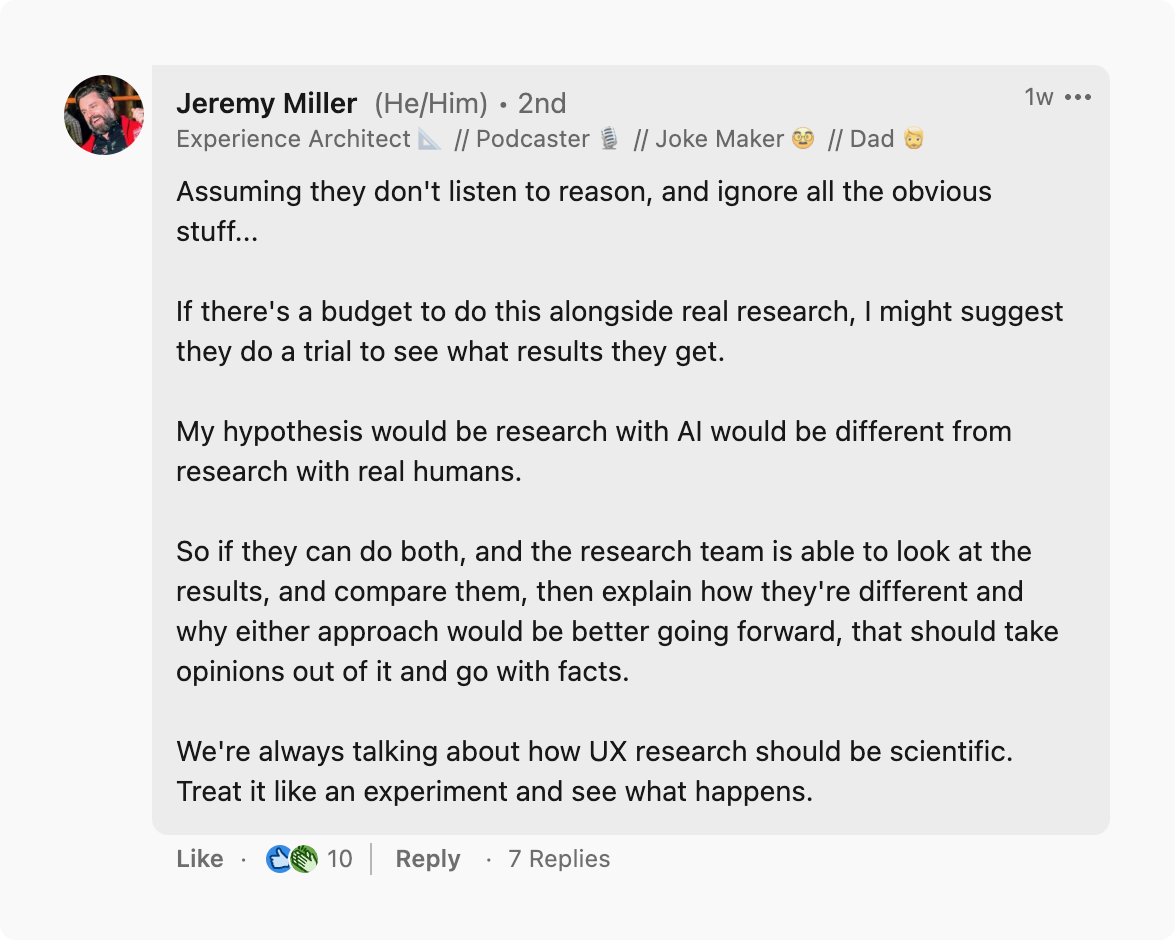

In other words—let’s test it. Jeremy Miller made this pragmatic suggestion. We do this when considering new tools in our research practices. Why not view synthetic users the same way?

Emma Huyton agreed with Jeremy’s comment:

Meghan Dove, skeptical that AI will live up to human results, posted a well-articulated rationale for such empirical testing. In part, she said:

“An algorithm is only as good as the data it’s trained on. Demonstrate to me that you trained your algorithm with datasets that used the same rigorous, scientific approach that a good researcher does, and I’ll consider testing your solution.

Demonstrate to me that you have captured significant amounts of data from users who represent the population I’m studying, show me your ‘users’ are based on the diverse segments, backgrounds, and experience levels of the ones I currently interview, that you’re basing ‘user opinions’ on the real opinions of diverse groups, that you used real data, had enough data to recognize reliable patterns, and that you’re not putting words in the mouths of users.”

Others recoiled at testing synthetic and human participants to compare results. Jennifer Harmon, for example, replied with this:

I understand Jennifer’s point. And those adamantly opposed to introducing and potentially adopting AI-generated users into our research processes may also reject this prospect. But I do appreciate the pragmatism.

Why? Because we do not work in research bubbles. We will have non-research stakeholders who see the promise of greater speed and lower costs and will want to consider changes to our research processes.

One way to demonstrate the potential problems with this new technology is by using our expertise—research—to show why humans rule when it comes to user research.

Alec Scharff placed the burden of proof on companies creating and marketing such products.

Perhaps they should be conducting highly rigorous studies and transparently sharing the results. He also made a valid point: “...why not skip ‘synthetic user research’ and just create a synthetic marketplace that directly predicts how well your product will sell?”

The rejectionist view: The technology is inherently/currently flawed

The empirical view, though worth mentioning, was somewhat overruled by the reaction of many others. A lot of researchers want nothing to do with this technology.

Many commenters made it clear that these points are essential in the discussion: AI is only as good as the data it is trained on. It may not be able to account for biases or cultural differences in its conceptualization of users or its mimicry of user attitudes. Given these flaws, how can we justify such a radical change to our research practices?

Replying to Meghan Dove, Carolyn Bufford Funk, PhD pointed out, “The average will typically miss the nuance and insight of the experts (who are not average). And a training set of the internet will necessarily exclude or under-represent (and therefore likely misrepresent) populations with no or limited internet access.”

Naomi Civins, PhD illustrated another problem well, reminding us of what we’re often seeking when we conduct research with real people:

Rosa Vieira de Almeida 卢苇 offered the perspective from content design:

Others took the ultimate rejectionist view and didn’t see the point in engaging in the discussion at all.

Jared Spool said, “No matter how much we try to convince folks, many will ignore our logic and arguments. They’ve already decided. We can burn calories on a futile cause, or we can go do other more productive things with our time.”

The practical view: Aren’t our users human?

Several commenters focused on pointing out what may seem obvious but is sometimes overlooked: We are designing products to be used by humans. Shouldn’t we then test with humans?

Aleigh Acerni provided this perspective: Are we selling to humans or AI-generated customers? She does a great job of bringing it back to the current lack of empirical evidence.


Vinicius Sasso said, “I am here wondering why are they so excited about synthetic users when their products will be used (and judged) by actual people whose behavior is totally nuanced and influenced by context, culture, habits, and personal differences. If even twin brothers behave differently from each other, where is this self-assurance that synthetic users will provide solid data for their design and development processes coming from?”

In other words, human-centered research is crucial, and human-centered research comes from conducting research with—you guessed it, humans. Thomas Wilson put it this way:

Nic Chamberlain summarized the main point, short and sweet, and a great one to remember:

It would be ignoring the elephant in the room not to mention that a co-founder of one of the companies selling AI-generated users for research was quite active in the comments.

He even asked me if I was behind a Google Form from a group called Researchers Against Synthetic Users (I’m not, but I’d also love to know who is!).

My advice to him, echoing several other commenters, would be this: If you’re building a tool that radically augments the work of user researchers, it’s probably best to reach out to them to better understand their problem space. Real, human user researchers.

Subscribe to Outlier

Juicy, inspiring content for product-obsessed people. Brought to you by Dovetail.