Tags

[AI]

Published

10 August 2025

Content

Roderick Obrist

Creative

Sherline Maseimilian

Dovetail Chat keeps improving, making it faster, easier, and more reliable than ever to talk to your data. With agentic tool use, faster retrieval, traceable citations, cross-language search, and workspace-level tuning, Chat evolves with every release to deliver more accurate, helpful, and trustworthy answers. It’s customer intelligence that keeps getting better—so you can move faster with confidence.

Product managers unblock decisions faster, researchers synthesize interviews in minutes, and executives make customer-centric decisions, all with the trust (and citations) that gives them confidence to move quickly. Chat was designed to reduce “time to insight”, and we have just made it even better!

We’ve recently rebuilt Dovetail Chat on AI agents, so customers can scale their search for answers beyond simple search summarization. Chat now has tools to explore our product and your data on your behalf, whereas previously it was backed by a rigid, single-flow Retrieval-Augmented Generation (RAG) lookup.

This post explains how we cut retrieval latency by more than 14x (22s → 1.5s), added deep-linked citations for traceability and increased confidence, enabled cross-language retrieval, and let teams steer behavior with workspace-level instructions.

Roderick Obrist is Senior AI Engineer at Dovetail.

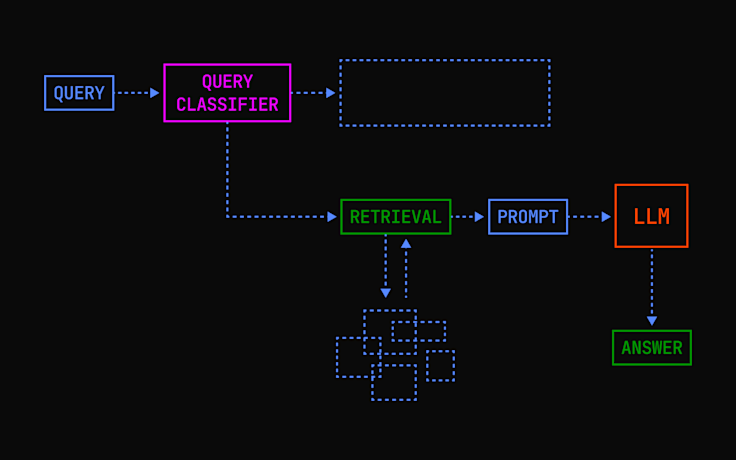

Retrieval-Augmented Generation

Traditional RAG chatbots are designed using three separate components:

A query intent detector (or search query generator)

One or more data retrieval pipelines

A synthesis/response LLM for summarisation

A fundamental limitation of this design appears when the user asks a question that the language model can answer without running a search: the fixed pipeline runs one anyway.

Consider a user asking any of the following questions:

“What model are you based on?”

“How many documents do you have access to?”

“What languages do you understand?”

“Are you able to create me a report on this data?”

If these questions triggered search queries, the pipeline would extract keywords, run searches that return nothing relevant, and produce worthless responses, when the correct answer should be either instant or a clear “I do not have this capability”.
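To make the limitation concrete, here is a minimal sketch of a fixed three-stage pipeline. It is a toy, not Dovetail's implementation: the stopword list, the two-passage corpus, and all function names are assumptions made up for illustration, and the "LLM" stages are replaced with plain string handling.

```python
from __future__ import annotations

import re

# Toy stopword list, just enough for this illustration.
STOPWORDS = {"what", "are", "you", "on", "do", "to", "have", "how", "many", "the", "a", "is"}

# Made-up corpus standing in for a workspace's documents.
CORPUS = ["Users found onboarding confusing.", "Export to CSV is slow."]


def generate_search_query(question: str) -> list[str]:
    """Stage 1: reduce the question to keywords, whether or not a search makes sense."""
    words = re.findall(r"[a-z]+", question.lower())
    return [w for w in words if w not in STOPWORDS]


def retrieve(keywords: list[str], corpus: list[str]) -> list[str]:
    """Stage 2: return passages that mention any keyword."""
    return [p for p in corpus if any(k in p.lower() for k in keywords)]


def synthesize(passages: list[str]) -> str:
    """Stage 3: stand-in for the summarising LLM."""
    if not passages:
        return "I could not find anything relevant."
    return "Summary of: " + " | ".join(passages)


def rigid_rag(question: str, corpus: list[str]) -> tuple[list[str], list[str], str]:
    """Always runs all three stages in order; there is no way to skip the search."""
    keywords = generate_search_query(question)
    passages = retrieve(keywords, corpus)
    return keywords, passages, synthesize(passages)
```

Calling `rigid_rag("What model are you based on?", CORPUS)` searches for the keywords `model` and `based`, finds nothing, and returns a fallback answer to a question that never needed a search.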

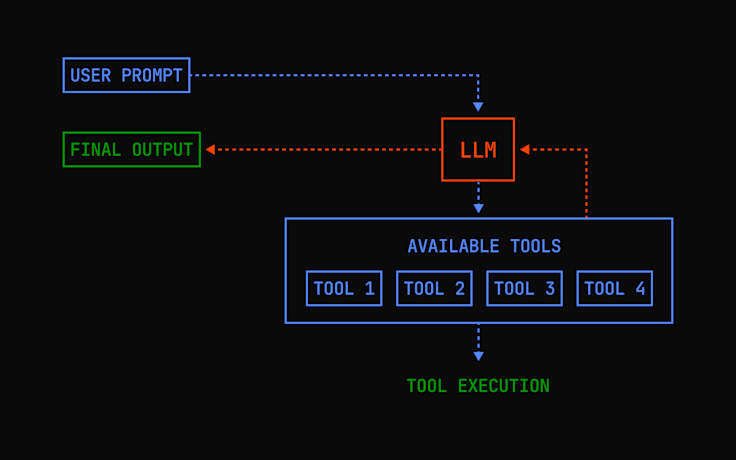

Tool use

Modern LLM systems support a paradigm called tool use. Tool use involves providing a list of capabilities to the AI and asking it to self-orchestrate the invocations. The benefits are:

The LLM is aware of all its capabilities, and by extension, its shortfalls

The LLM is not forced into a single approach to data lookup, but can choose the path it deems more effective

If the response from a tool is poor, the LLM can try again with a different search query or tool

If the question does not require any tool invocation, the LLM can respond to the user straight away
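The benefits listed above can be sketched as a small agent loop. This is an illustration under stated assumptions, not our production code: `stub_model` is a hard-coded stand-in for the LLM's decision step, and the two tools, their names, and the one-entry corpus are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class ToolCall:
    name: str
    argument: str


@dataclass
class FinalAnswer:
    text: str


Decision = Union[ToolCall, FinalAnswer]

# Made-up data: topic -> passage.
CORPUS = {"onboarding": "Users found onboarding confusing."}


def search_documents(query: str) -> str:
    hits = [text for topic, text in CORPUS.items() if topic in query.lower()]
    return "\n".join(hits) or "NO_RESULTS"


def count_documents(_: str) -> str:
    return str(len(CORPUS))


# The list of capabilities handed to the model (hypothetical tool names).
TOOLS: dict[str, Callable[[str], str]] = {
    "search_documents": search_documents,
    "count_documents": count_documents,
}


def stub_model(question: str, observations: list[str]) -> Decision:
    """Hard-coded stand-in for the LLM choosing its next step."""
    q = question.lower()
    if not observations:
        if "how many documents" in q:
            return ToolCall("count_documents", "")
        if "say about" in q:
            return ToolCall("search_documents", question)
        # No tool is needed, so answer straight away.
        return FinalAnswer("Answered directly, no tool call needed.")
    if observations[-1] == "NO_RESULTS":
        if len(observations) < 2:
            # Poor result: retry once with a different query.
            return ToolCall("search_documents", "onboarding")
        return FinalAnswer("I could not find anything relevant.")
    return FinalAnswer(f"Based on: {observations[-1]}")


def run_agent(question: str, max_steps: int = 4) -> tuple[str, int]:
    """Loop until the model answers; returns (answer, number of tool calls)."""
    observations: list[str] = []
    for _ in range(max_steps):
        decision = stub_model(question, observations)
        if isinstance(decision, FinalAnswer):
            return decision.text, len(observations)
        observations.append(TOOLS[decision.name](decision.argument))
    return "Stopped after reaching max_steps.", len(observations)
```

In this sketch, “What model are you based on?” is answered with zero tool calls, while a question whose first search comes back empty triggers one retry with a reworded query before the answer is produced.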

We’ve been working closely with customers to make agentic chat faster, more accurate, and easier to trust. Beyond broad quality upgrades, we’re shipping four major capabilities that improve speed to insight, traceability, and flexibility.

Workspace-wide indexing

Low-latency Chat responses let customers deepen their searches more quickly: they can request clarifications, zone in on certain parts of their project or channel, and get to insights faster. To enable this, we have rebuilt the way the chatbot accesses information.

This involved creating a separate HNSW index for each customer’s workspace. We achieved this by using pgvector and partitioning a single table by our customer identifier. HNSW performs graph-based approximate nearest neighbor (ANN) search that returns the best candidates early, so results stream without scanning the full space.

With workspace indexing and code optimizations, we shaved retrieval latency from 22 seconds to 1.5 seconds, and we expect to reduce it further with more optimization.
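As a rough sketch of what a partitioned table with per-workspace HNSW indexes can look like in pgvector, the snippet below builds the SQL as plain strings. The table and column names, the 1536-dimension embedding, the cosine operator class, and the choice of LIST partitioning are all assumptions for illustration; the post only states that a single table is partitioned by customer identifier.

```python
from __future__ import annotations

# Parent table, partitioned by workspace (hypothetical schema).
PARENT_TABLE_DDL = """
CREATE TABLE chunks (
    workspace_id bigint NOT NULL,
    chunk_id     bigint NOT NULL,
    content      text   NOT NULL,
    embedding    vector(1536),
    PRIMARY KEY (workspace_id, chunk_id)
) PARTITION BY LIST (workspace_id);
"""

# Filtering on the partition key lets PostgreSQL prune to one partition,
# so the ANN search only touches that workspace's HNSW index.
# <=> is pgvector's cosine distance operator.
SEARCH_SQL = """
SELECT chunk_id, content
FROM chunks
WHERE workspace_id = %(workspace_id)s
ORDER BY embedding <=> %(query_embedding)s::vector
LIMIT %(limit)s;
"""


def workspace_partition_ddl(workspace_id: int) -> list[str]:
    """Return the statements that add one workspace's partition and its HNSW index."""
    if isinstance(workspace_id, bool) or not isinstance(workspace_id, int):
        raise TypeError("workspace_id must be an int")  # keeps the generated SQL safe
    partition = f"chunks_ws_{workspace_id}"
    return [
        f"CREATE TABLE {partition} PARTITION OF chunks FOR VALUES IN ({workspace_id});",
        f"CREATE INDEX {partition}_embedding_hnsw ON {partition} "
        f"USING hnsw (embedding vector_cosine_ops);",
    ]
```

Each partition gets its own, smaller HNSW graph, so a query scoped to one workspace never has to traverse vectors that belong to other customers.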

Deep-linked citations

One of the most important goals for responses is to minimize hallucinations. When a customer receives a response that was aggregated across a workspace, they need to trust the insights provided and easily access the source material that was used to generate it.

Deep-linked citations are powered by a deeper understanding of which inputs an LLM uses to generate its output. When we attach source documents to a request, the model returns answers with per-span references—document index/title plus exact locations (character ranges for text). Because we’ve built this as a first-class feature (not a prompt trick) we can guarantee valid pointers to the provided docs, making it trustworthy by design.
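The per-span references described above can be pictured with a small data structure. The sketch below is an assumption about shape only: the field names, the `resolve_citation` helper, and the URL format are hypothetical, and the check shown here simply confirms on the receiving side that a character range really points at the quoted text.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SourceDocument:
    doc_id: str
    title: str
    text: str


@dataclass(frozen=True)
class Citation:
    doc_index: int   # position of the document in the list sent with the request
    start: int       # first character of the cited span (inclusive)
    end: int         # end of the cited span (exclusive)
    cited_text: str  # the quoted text the answer relies on


def resolve_citation(
    citation: Citation,
    documents: list[SourceDocument],
    base_url: str = "https://example.invalid/docs",  # placeholder, not a real URL
) -> str:
    """Validate a citation against the provided documents and build a deep link."""
    if not 0 <= citation.doc_index < len(documents):
        raise ValueError("citation points at a document that was not provided")
    doc = documents[citation.doc_index]
    if not 0 <= citation.start < citation.end <= len(doc.text):
        raise ValueError("character range falls outside the document")
    if doc.text[citation.start:citation.end] != citation.cited_text:
        raise ValueError("quoted text does not match the source span")
    return f"{base_url}/{doc.doc_id}#chars={citation.start}-{citation.end}"
```

A citation that passes these checks can be rendered as a link that opens the source at the exact span; one that fails is rejected instead of being shown to the user.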

Multi-language support

Telling a chatbot to respond in the language the user is writing in is simple. The challenge Dovetail set out to solve with multi-language support was that Chat needed to perform keyword search in the language of the document base.

Here is how that works in practice:

A user uploads a French-language cookbook

The user asks “Gaano karaming mantikilya ang kailangan sa paggawa ng croissant?” (“How much butter goes in a croissant?” in Tagalog)

Chat conducts a keyword search for beurre croissant (“butter croissant” in French)

Chat reads the keyword matches in French and responds in Tagalog
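The flow above can be sketched in a few lines. Everything here is a toy under stated assumptions: `stub_plan` hard-codes what the LLM would decide for the croissant example, the language codes and names are hypothetical, and the two French passages (including the butter quantity) are made-up sample data.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SearchPlan:
    user_language: str      # language to answer in
    document_language: str  # language the corpus is written in
    keywords: list[str]     # search terms, already in document_language


def stub_plan(question: str, document_language: str) -> SearchPlan:
    """Stand-in for the LLM step that picks keywords in the corpus language."""
    # Hard-coded for the croissant example: Tagalog question, French keywords.
    return SearchPlan(user_language="tl", document_language=document_language,
                      keywords=["beurre", "croissant"])


def keyword_search(documents: dict[str, str], keywords: list[str]) -> list[str]:
    """Return the ids of documents that contain every keyword (case-insensitive)."""
    return [
        doc_id
        for doc_id, text in documents.items()
        if all(k.lower() in text.lower() for k in keywords)
    ]


# Made-up French passages standing in for the uploaded cookbook.
COOKBOOK = {
    "croissant": "Pour les croissants, il faut 250 g de beurre.",
    "baguette": "La baguette ne contient pas de beurre.",
}
```

With this data, `keyword_search(COOKBOOK, stub_plan(question, "fr").keywords)` matches only the croissant passage; the final step, reading the French match and answering in `user_language`, is left to the model.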

Workspace-level instructions

With workspace-level instructions, you can now customize how Chat behaves across your entire workspace. Think of it as setting the ground rules—whether that’s asking Chat to speak in an executive-friendly tone, prioritize product feedback over customer sentiments, or focus on a specific part of your product. These instructions act like a guiding compass, helping Chat understand what matters most to your team and tailoring its responses accordingly. This is currently available to a limited number of trial enterprise customers, but we plan to expand access soon.

Does Chat not respond to you in your preferred tone?

Do you find that it focuses on customer sentiments rather than exclusively on feature requests?

Does it not understand what is unique to your workspace and its data?

Now you can guide Chat to respond the way you want—just tell it how.
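One simple way to picture workspace-level instructions is as extra text merged into the system prompt for every conversation in that workspace. The sketch below assumes exactly that; the base prompt, the section label, and the length cap are hypothetical, and the post does not describe how instructions are actually applied.

```python
from __future__ import annotations

# Hypothetical base prompt shared by all workspaces.
BASE_PROMPT = "You are a research assistant. Cite the sources you use."


def build_system_prompt(
    base_prompt: str,
    workspace_instructions: str | None,
    max_chars: int = 2000,  # assumed cap so instructions cannot crowd out the base prompt
) -> str:
    """Append a workspace's instructions to the base prompt, if any were set."""
    instructions = (workspace_instructions or "").strip()
    if not instructions:
        return base_prompt
    instructions = instructions[:max_chars]
    return f"{base_prompt}\n\nWorkspace instructions:\n{instructions}"
```

For example, `build_system_prompt(BASE_PROMPT, "Use an executive-friendly tone. Prioritize feature requests.")` yields a prompt that carries the team's ground rules into every Chat session, while a workspace with no instructions gets the base prompt unchanged.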

Haven’t tried Dovetail Chat yet? Get started today or request a demo from our sales team.
