Context is key: the story behind how we enhanced our Channels onboarding and AI-powered topics

Tags

[ai]

Published

6 July 2025

Content

Jessica Pang, Tessa Marano

Creative

Jessica Pang


Dovetail’s Lead Product Designer, Jess Pang, shares how she and the team evolved the Channels topics model and improved theme relevance with three new feature updates: a simplified onboarding flow, context prompting, and topic generation based on customers’ prompts and data.

I’m Jess, the Lead Product Designer on Channels, and I've been working closely with the team to address what we’ve been hearing from customers and continuously improve the Channels experience for you.

What is Channels?

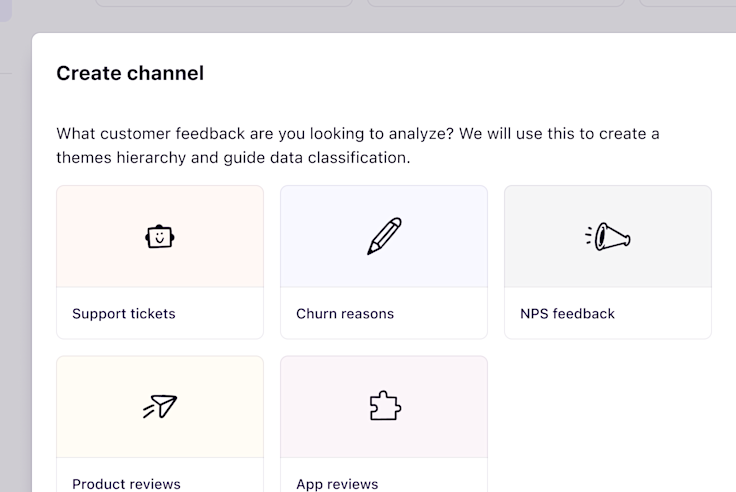

Channels transforms large amounts of continuous customer feedback—support tickets, app reviews, product feedback, and more—into actionable insights to help product teams prioritize what to build next and make informed decisions.

In this blog post, I'll dive into our thinking behind enhanced topics, a more streamlined onboarding flow, and the ability to prompt for more relevant classification.

Where it started with topics

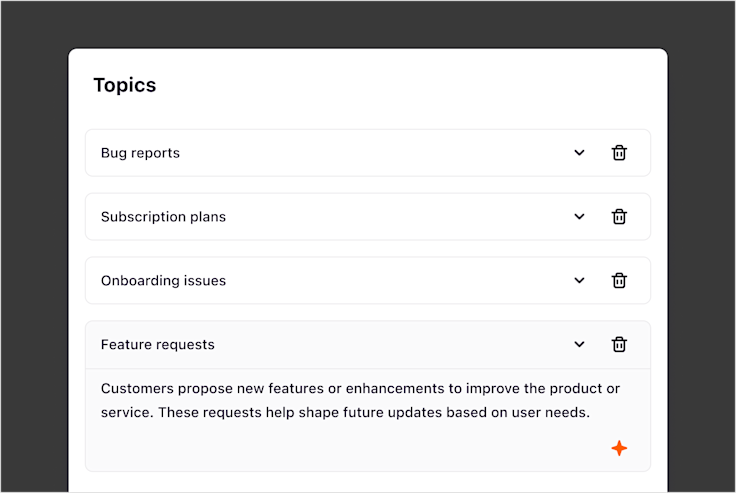

Continuous theme improvements have been a top priority for us. Earlier this year, we shipped topics, a new layer of structure that sits above themes to help customers better organize and make sense of feedback. Going beyond filters, topics group related themes and surface broader patterns.

At the time of release, our hypothesis was that each feedback type would share its own set of common topics. For example, we pre-defined topics like Feature requests and Technical issues for support tickets whereas we had Pricing and Missing features for churn reason analysis. It was the right direction, but in practice, these categories often ended up being either too broad or not quite relevant.

Some of the challenges we heard were:

Customers were unsure how to use the pre-defined topics because they weren’t relevant to their context.

Our in-app product feedback also showed that customers were seeing either duplicate themes across topics or different topics with similar wording.

Our metrics also showed that topics were being deleted more often than they were created, confirming that the pre-defined topics were creating noise rather than helping.

Why context matters

The missing link in topic relevancy was context—something we’ve had a growing amount of feedback about. Customers wanted to share their business context to yield more relevant and accurate results.

During Insight Out 2025, Dovetail’s global conference for product teams, we ran a workshop with some of our customers to understand what context means to them at the workspace level, using this visual prompt I mocked up to start the discussion.

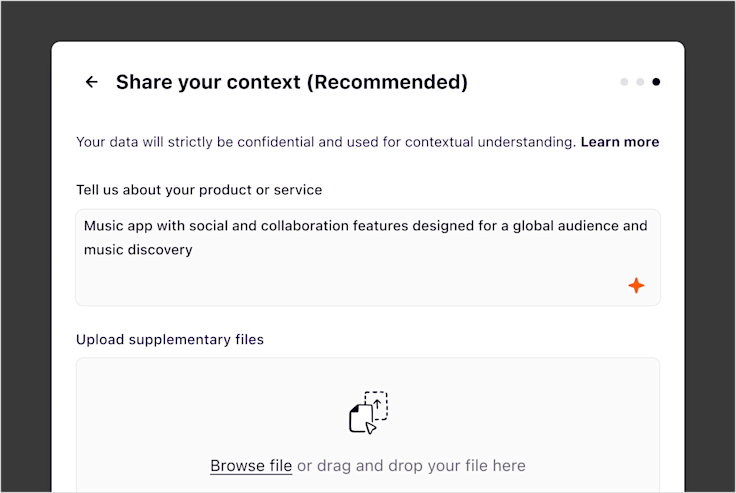

Some mentioned sharing context on their business model and competitors they wanted to monitor, whereas others wanted to upload glossary files and documents.

We explored how we could start by bringing this into Channels.

Simplifying onboarding

Previously, when you created a channel, we asked what type of feedback you were looking to analyze, to help guide the LLM’s topic creation and theme generation.

But with more direct integrations, we can now infer that from the integration source—support tickets for Intercom, app reviews for Google Play, or product reviews for Pendo.

So there’s really no need to ask.

You might then ask: what about CSV uploads?

We can solve that through prompting instead, which is way more valuable than a static response.

Alternatively, if you want to leave it to the LLM, we will suggest topics based on your dataset, and you can always add context later.
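To make the idea concrete, here’s a minimal sketch in Python of how a feedback type could be inferred from the integration source, with the context prompt filling the gap for CSV uploads. The mapping, source keys, and function names below are hypothetical illustrations based on the examples above, not how Channels is actually implemented.

```python
from typing import Optional

# Hypothetical mapping from integration source to feedback type, using the
# examples from this post. Illustrative only, not Dovetail's implementation.
FEEDBACK_TYPE_BY_SOURCE = {
    "intercom": "support tickets",
    "google_play": "app reviews",
    "pendo": "product reviews",
}


def infer_feedback_type(source: str) -> Optional[str]:
    """Return the feedback type implied by an integration source,
    or None when the source (e.g. a CSV upload) doesn't tell us."""
    return FEEDBACK_TYPE_BY_SOURCE.get(source.lower())


def build_topic_context(source: str, context_prompt: str = "") -> str:
    """Assemble the (hypothetical) context passed to topic generation.

    Integration sources contribute their inferred feedback type; a CSV
    upload relies on the user's context prompt instead. If neither is
    available, the result is empty and topics come from the data alone."""
    parts = []
    feedback_type = infer_feedback_type(source)
    if feedback_type:
        parts.append(f"Feedback type: {feedback_type}")
    if context_prompt.strip():
        parts.append(f"Context: {context_prompt.strip()}")
    return "\n".join(parts)
```

With this sketch, `build_topic_context("Intercom")` yields `"Feedback type: support tickets"`, a CSV upload with a prompt contributes only the prompt, and a CSV upload with no prompt yields an empty string, leaving topic suggestions to the dataset.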

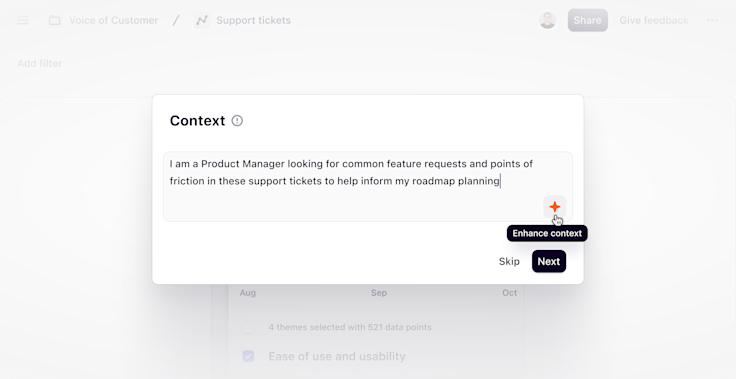

With this, we decided to scrap that previous step entirely and introduce a Context step right after you configure your integration source.

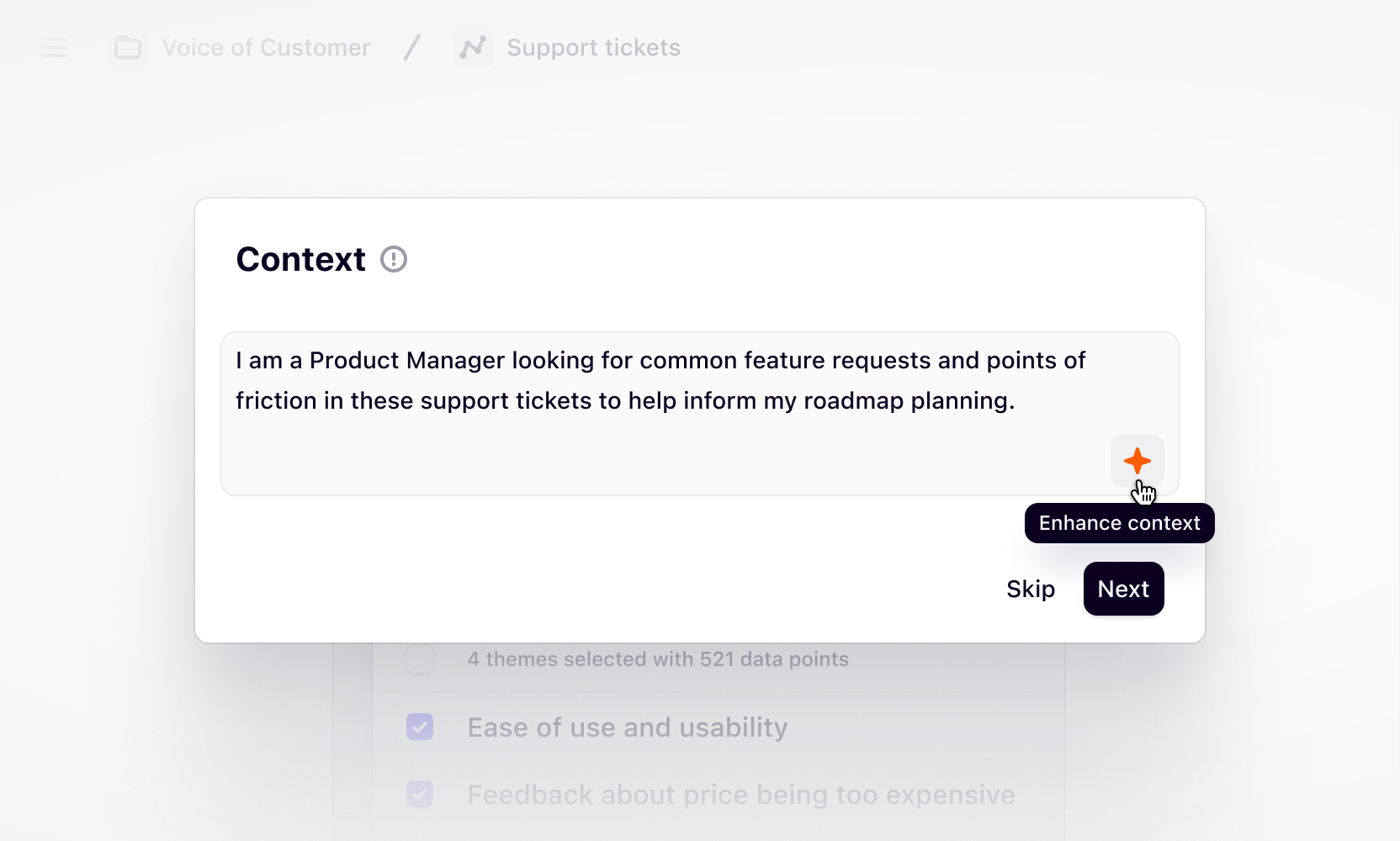

Suggesting topics from your prompt

The magic of generative AI is in those aha moments when it surfaces insights you hadn’t even considered.

For instance, when I’ve prompted for feature ideas to improve the Channels experience, I’ve always been impressed by how far it can go with just a two-sentence prompt.

The same goes for topics: adding even a few words of context to the prompt improved relevance.

From the suggested list of topics, you can edit, delete, or create new ones. It’s your canvas!

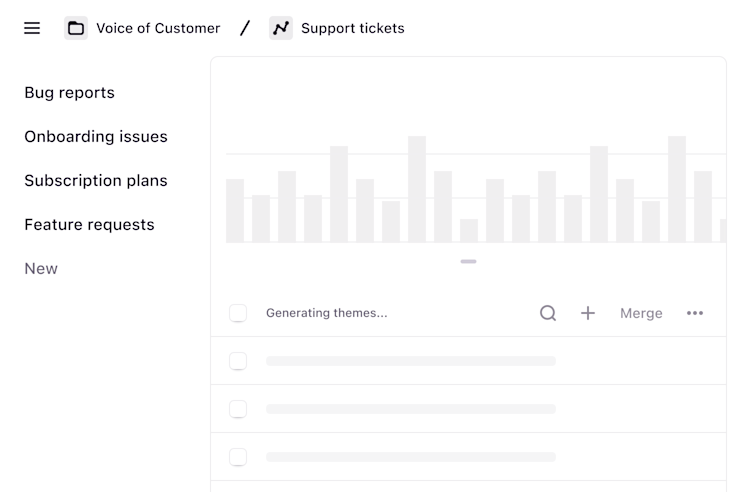

Generating topics based on your data

Now, instead of pre-defining topics, we generate topics based on your context prompt and dataset.

That means no more random or overlapping topics—at least, not in the obvious ways. But it’s not as clear-cut as it sounds... read on ;)

What we learned

Is it a duplicate or just similar?

Natural language is inherently subjective and often ambiguous. The way people describe things can vary depending on their role, what they’re trying to get out of the analysis, or how detailed they expect the output to be.

Take “Easy to use interface” and “Intuitive navigation” as an example: you could argue that they’re different topics, but someone else might see them as overlapping topics that are just worded differently.
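Wording alone is a poor guide here. As a rough illustration, the sketch below flags topic pairs whose labels share most of their words (the Jaccard overlap of their word sets). This is a deliberately crude, hypothetical stand-in, not the check we use, and the 0.5 threshold is an arbitrary assumption. It catches a reworded duplicate such as the made-up label “Interface is easy to use”, but scores “Easy to use interface” and “Intuitive navigation” at 0.0, even though many people would call them overlapping. Catching that kind of near synonym generally needs semantic similarity rather than word matching, and even then the cut-off is a judgment call.

```python
import re


def tokens(label: str) -> set[str]:
    """Lowercase word tokens of a topic label."""
    return set(re.findall(r"[a-z]+", label.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of distinct words two labels have in common (0.0 to 1.0)."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def likely_duplicates(labels: list[str], threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """Return label pairs whose word overlap meets the (arbitrary) threshold."""
    pairs = []
    for i in range(len(labels)):
        for j in range(i + 1, len(labels)):
            score = jaccard(labels[i], labels[j])
            if score >= threshold:
                pairs.append((labels[i], labels[j], score))
    return pairs
```

Running `likely_duplicates(["Easy to use interface", "Intuitive navigation", "Interface is easy to use"])` returns only the first and third labels, with a score of 0.8; the “Intuitive navigation” pairs are never flagged.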

One way we evaluate topics is by checking whether they’re near synonyms, but even that can be subjective. This is why customer feedback is so important to us as we continue to iterate on topic and theme quality, which leads me to my next point.

Testing can be tricky

Before shipping, we ran several rounds of internal testing with different datasets and evaluated the results against criteria such as duplicates, hallucination rate, and more.

The biggest challenge in designing and building an AI-native product has been testing with a variety of datasets, so this is a shoutout to all the customers who shared their time and feedback with us: Achievers, Zapier, Lema, Optio, Resim, Okta, America’s Test Kitchen, Kone Insights Hub, Visibuild, CDP, CircleK and more!

And there you have it! Have a play around with improved topics and let us know your thoughts on everything we’ve shipped:

A simplified onboarding flow

Context prompting

Topic generation from your context prompt and data

Haven't tried Channels yet? Get started today or request a demo from our sales team.
