
You can only deliver an exceptional customer experience if you deeply understand your customers. Guesswork won’t be enough. You must learn exactly what your customers want, what their pain points are, and what truly delights them.

Surveys are an effective way to get to know your customers, but writing the right questions to uncover essential insights can be challenging.

This guide will help you put together the right survey questions to find those essential data points, helping you deliver on the promise of exceptional customer experience.

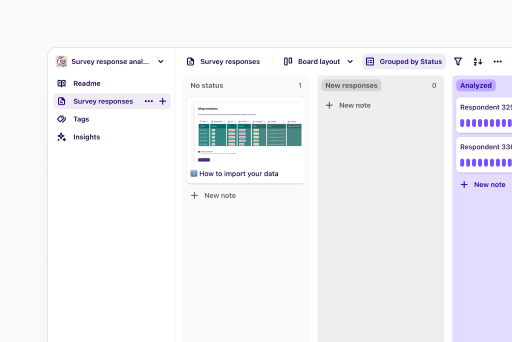

Free template to analyze your survey results

Analyze your survey results in a way that's easy to digest for your clients, colleagues or users.

Use template

What makes a good survey question?

Before diving into the most helpful types of survey questions, it’s useful to consider what makes a survey question effective.

Consider the following aspects when creating your survey:

Timing

Questions asked at the right time will feel much more relevant to the customer. For example, asking a customer how they feel right after an interaction with your business rather than a few days later will ensure you receive feedback that accurately represents their experience.

Relevance

Asking the right questions to the right customers is also important. This might mean segmenting customers based on their attributes, purchasing history, or customer category to ensure you only send surveys that are relevant to them.

Format

It’s also helpful to consider whether a pop-up, on-page, email, or phone survey makes the most sense depending on the situation, questions, and customer.

Wording

Asking the wrong question could lead your team in the wrong direction. The wrong wording could also confuse or even offend your respondents. Clarity, sensitivity, and accuracy are all important when it comes to getting the wording right.

Purpose

The purpose of the data collection and your analysis process will dictate the types of questions you ask. For example, for more quantitative analysis, you’ll want to keep questions closed-ended (like multiple choice and true or false) so that you can better visualize and handle the data.

You could also survey a handful of participants and leave the questions open to understand their motivations and experiences on a deeper level.

12 core survey question types (with examples)

To learn more about your customers, you’ll need to consider different types of survey questions and the advantages each one offers.

We’ve rounded up 12 question types to provide useful survey question examples for your next project.

1. Open-ended questions

Open-ended questions don’t have defined answers. This means you hear what your customers have to say in their own words.

These questions allow participants to give more detailed and informed responses. You might find they offer unprompted revelations and information, unlocking interesting insights that you wouldn’t uncover through more standard responses.

Asking this type of question gives you the chance to discover customer needs, wants, and pain points that you and your team weren’t aware of.

Bear in mind that open-ended questions can be challenging to quantify, and the analysis process takes longer. However, you can label responses and group them into topics to gauge overall sentiment and draw out useful takeaways. You might decide to run more quantifiable surveys alongside or afterward to validate those sentiments with statistical data.

Examples of open-ended questions include the following:

What feelings did you have when leaving your bank details in our portal?

What goal do you hope to achieve by downloading our app? Why do you need to accomplish this?

What makes you trust our company more than the others?

2. Multiple choice questions

Multiple choice questions ask survey respondents to choose an answer from a predefined list that they feel suits them best. These types of questions can help your team discover sentiment quickly. They are also easy to analyze and extrapolate data from.

The downside of multiple choice is that respondents may feel swayed to check an item that doesn’t really represent their feelings. Multiple choice also limits what a participant can reveal, given they don’t have an opportunity to expand on their answers.

Here’s an example of a multiple-choice question:

How easy (or challenging) do you find our product to use?

Very easy

Quite easy

Quite challenging

Very challenging

3. Rating scales

Asking your customers to rate your business, a feature, or their feelings on a scale can help you gain insights about your offering quickly and easily.

While rating scales lack nuance and deeper insights, you can use them to quickly discover overall sentiment toward your company, product, or service.

The net promoter score (NPS) is a well-known example of a rating scale. Organizations use the NPS to assess customer loyalty and satisfaction.

The score asks respondents to give a rating from 0 (not at all likely) to 10 (extremely likely) in answer to the following question:

How likely is it that you would recommend [product/service/company] to a friend or colleague?
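
As a rough illustration of how these ratings are commonly turned into a single score, the sketch below uses the standard NPS convention: respondents who answer 9 or 10 count as promoters, those who answer 0 to 6 count as detractors, and the score is the percentage of promoters minus the percentage of detractors. The function name and the sample ratings are made up for this example.

```python
def net_promoter_score(ratings):
    """Return the NPS (from -100 to 100) for a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)   # answered 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # answered 0 to 6
    # Passives (7 or 8) only affect the total number of responses.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical responses from ten customers.
sample_ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(net_promoter_score(sample_ratings))  # 20.0
```

Here, five promoters and three detractors out of ten responses give a score of 20.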

4. Likert scales

Likert scales are a specific type of rating scale. They include a range of answers that express an opinion, attitude, or belief. This differs from general rating scales, which often gather numeric answers.

Typically, Likert scales are used to help teams understand customer attitudes. As such, they can be used to improve an organization’s offering.

Here’s an example of a Likert scale:

How satisfied are you with our [product/service]?

Very dissatisfied

Dissatisfied

Neutral

Satisfied

Very satisfied

5. Dropdown questions

With predefined answers, dropdown questions require respondents to select an answer from a dropdown menu. The dropdown menu displays a list of potential answers from which participants can choose the one they identify with most.

The use of dropdown questions can ensure a survey is less cluttered and simpler to navigate. Given the answers are predefined, they can be simple to analyze and draw insights from.

However, this question type doesn’t allow respondents to elaborate or provide feedback in their own words. Additionally, options that are higher up the list are more likely to be chosen.

It’s important to know when a dropdown is the right choice and, where possible, to shuffle the order of the options for each respondent.

One final tip for using dropdown questions: expansive dropdown lists should be searchable and easy to navigate.

Below is an example of a dropdown question:

Select the product you’re most likely to buy in the next month from the list below:

[Dropdown Menu]

Jackets & coats

Tops

Dresses

Jeans & trousers

Shirts

Watches

Belts
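
One possible way to shuffle the options for each respondent is sketched below. It assumes each respondent has some stable identifier (the `respondent_id` values here are hypothetical) and seeds the shuffle with it, so a given person sees the same order if they reload the survey. Most survey tools offer a built-in randomization setting, so treat this only as an illustration of the idea.

```python
import random

OPTIONS = [
    "Jackets & coats",
    "Tops",
    "Dresses",
    "Jeans & trousers",
    "Shirts",
    "Watches",
    "Belts",
]


def options_for(respondent_id, options=OPTIONS):
    """Return the options in an order that is stable for one respondent."""
    rng = random.Random(respondent_id)  # seeded per respondent
    shuffled = list(options)            # copy, so the master list is untouched
    rng.shuffle(shuffled)
    return shuffled


# Hypothetical respondent identifiers.
print(options_for("respondent-001"))
print(options_for("respondent-002"))
```

Shuffling like this suits lists with no natural order. Options that follow a sequence, such as age brackets, are usually better left in order.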

6. Demographic questions

Demographic questions can be used to categorize and better understand your customers, revealing more about their personal attributes.

These questions can be useful in showing you how to deliver personalized experiences to your customers. They can also help you understand a customer’s background, wants, needs, and personal interests.

However, caution should be applied to these question types. Don’t bring unconscious bias into product development or make assumptions based on demographic information.

Demographics include the following:

Age

Gender

Ethnicity

Occupation

Marital status

Income bracket

Geographic location

Here’s an example of a demographic question:

What is your gender?

Female

Male

Non-binary

Prefer not to say

7. Ranking questions

Ranking questions ask participants to order predetermined items. This typically involves a numbered list where the most preferred option is one, the second most preferred option is two, and so on.

Ranking questions can help teams understand their customers’ preferences, and extracting data from them can be quick. However, a downside is that they lack nuance, as they don’t tell you why participants prefer certain list items over others.

Here’s an example of a ranking question:

Rank these new product features for our design interface (1 being the most preferred and 5 being the least):

Drag and drop options

More color selections

AI capabilities

Design templates

Stock images
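
To show how quickly ranking data can be summarized, here is a minimal sketch that averages the rank each feature received, where a lower average means a stronger preference. The mean rank is just one simple way to summarize rankings, and the three responses below are invented for the example.

```python
from collections import defaultdict


def mean_ranks(responses):
    """Return (item, mean rank) pairs, most preferred first."""
    totals = defaultdict(int)
    counts = defaultdict(int)
    for ranking in responses:
        for item, rank in ranking.items():
            totals[item] += rank
            counts[item] += 1
    means = {item: totals[item] / counts[item] for item in totals}
    # A lower mean rank means the item was preferred more often.
    return sorted(means.items(), key=lambda pair: pair[1])


# Hypothetical answers from three respondents (1 = most preferred).
responses = [
    {"Drag and drop options": 1, "Design templates": 2, "AI capabilities": 3,
     "Stock images": 4, "More color selections": 5},
    {"AI capabilities": 1, "Drag and drop options": 2, "Design templates": 3,
     "More color selections": 4, "Stock images": 5},
    {"Drag and drop options": 1, "AI capabilities": 2, "Stock images": 3,
     "Design templates": 4, "More color selections": 5},
]

for item, mean in mean_ranks(responses):
    print(f"{item}: {mean:.2f}")
```

With this sample data, drag and drop options come out on top and more color selections come last.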

8. Image choice questions

Surveys with image choice questions differ from others in that the answers are not numerical or text-based; instead, they are visual images. These survey types can be particularly helpful for understanding which visual elements—including colors, layouts, and fonts—are more appealing to your customers.

Image choice questions can also help your team understand which design elements are more likely to result in purchases.

Here’s an example of an image choice question:

Which of these four logos is most visually appealing to you?

The answer would have four different logo designs to choose from.

9. Click map questions

Surveys with click map questions are also image-based; however, they require respondents to interact with a specific part of an image.

An image is displayed to a respondent—this could be a design, map, chart, or product layout—and they are then asked to click on specific parts of that image in response to a question.

In user experience design, click maps can help teams discover where users are drawn to in visual images, enabling them to optimize web pages, products, and features.

Here’s an example of a click map question:

Please select the areas of the website homepage that you find most visually appealing:

The survey would display a homepage that the users can click on, and the clicks would be recorded.

10. File upload questions

Surveys sometimes include file upload questions to collect supporting documentation. This enables the participant to provide images, reports, diary entries, or screenshots.

This question style can be particularly helpful in diary studies where participants are asked to write down their experiences over a period of time. It can also be useful when users are asked to provide information about issues they may be having. In this case, they might upload a screenshot of a website issue.

File uploads can be particularly helpful for quickly understanding what a participant is referring to. However, uploads require extra effort, so fewer participants will complete these questions. The more work, or perceived work, a participant needs to do, the fewer responses and the lower quality you can expect.

Below is an example of a file upload question:

Have you experienced any issues with our application in the past week? Please provide images/screenshots/files to describe those issues.

11. Slider questions

Slider questions are often used to assess a participant’s satisfaction or feelings toward a product or service. Questions are presented alongside a sliding scale the user can move up and down.

The slider can represent quantities and function as a more visual way to provide a number. Alternatively, it can represent feelings on a range such as “strongly disagree” to “strongly agree.”

Like other scale-based surveys, the results of slider questions can be simple for teams to quantify. Sliders work particularly well if you know your users are more likely to be on a touchscreen when they respond to your survey.

Be careful with usability, as some screens or devices may make responding more difficult if the scale of your slider isn’t clear or easy to use.

Here’s an example of a slider question:

How satisfied are you with our [x] product?

A slider would then display a scale of “not satisfied” to “very satisfied.”

12. Benchmarkable questions

One of the most challenging aspects of conducting customer surveys can be accurately analyzing the data and drawing helpful insights. Benchmarkable questions can help. Answers to benchmarkable questions are typically standardized so that they can be added, compared, and analyzed easily.

Customer satisfaction scores, employee engagement scores, and NPS questions are all types of benchmarkable questions.

Here’s a specific example of a benchmarkable question:

On a scale of 1 to 10, how satisfied are you with our customer service?

Dos and don’ts of writing great survey questions

Once you have decided which question type(s) suit your situation, customers, and survey goals best, you need to consider which questions you will ask.

The way you ask questions is critical. Word a question incorrectly and you could confuse your respondents or receive inaccurate insights. The mix of questions and a polite tone toward participants are also important factors.

Some “dos” for writing good survey questions include the following:

DO be polite and concise: get the best out of your respondents by speaking politely to them (use “please” and “thank you”) and not taking too much of their time. The most helpful questions are typically just one sentence. Having said that, don’t skimp on essential details.

DO alternate your questions often: endless similar questions can be taxing for respondents, whether they’re open-ended, multiple choice, or rating scales. Mixing question types can keep surveys more interesting for participants, boosting engagement and completion rates.

DO consider the foot-in-the-door principle: participants who agree to small initial requests are more likely to agree to larger requests down the line. If your respondents have completed smaller surveys, they are good candidates for a follow-up survey with longer or more in-depth questions.

DO ask questions from the first-person perspective: writing from the first-person perspective can help make surveys feel more personalized and unique to respondents.

DO test your surveys first: imagine having high engagement rates in a survey only to realize the answers aren’t being properly collated or a dropdown menu is broken. Test your surveys thoroughly before sending them to save time for you and your respondents.

Here are some “don’ts” for writing good survey questions:

DON’T ask too many questions: avoid survey fatigue by keeping your surveys concise. If your surveys are too long, you’ll likely reduce completion rates, get poorer quality responses, and run the risk of frustrating your customers.

DON’T ask leading questions: leading questions include a kind of bias or implication. This can mean respondents are subtly or unsubtly persuaded to give a particular answer. The question may include presumptions, influential content, or implied information that impacts how participants respond.

DON’T ask loaded questions: loaded questions can cause bias. They typically involve emotive or suggestive language that can trigger a particular response. Loaded questions can be manipulative and designed to trap a person. Using neutral language and avoiding assumptions in questions can help you avoid loaded questions.

DON’T ask about more than one topic at once: asking about multiple topics at the same time can get confusing for the participant. To get the best (and most accurate) results, keep things simple and focus on one topic at a time.

Survey use cases: what you can do with good survey questions

Good survey questions can help teams deeply understand their customers and design better, more useful products. Survey results have many essential use cases, including the following:

1. Creating user personas

User personas help you truly resonate with customers and design more specifically for them rather than creating products that are too general. Survey data can help you build out accurate user personas with more reliable information.

2. Understanding your product and its metrics

When you understand your customers’ pain points and frustrations, it adds context to all the quantitative data you check regularly. For example, low engagement rates will make sense if you know half of your users get lost at a particular step.

The benefits don’t stop there. Knowing your users well doesn’t just explain what’s currently happening and why; it can ensure you develop better, more satisfying products based on customer opinions—not assumptions.

3. Understanding why people leave your website

You may be seeing high dropoff rates on your website but have no idea why. A survey may help reveal that there’s too much friction on your site or that the layout is too confusing. Without gaining that feedback from customers, you may never know why things aren’t working.

4. Informing your pricing strategy

Surveys, along with other key data, can help your team gain insights into your pricing and whether it works for your customers. This may help you revise pricing and optimize the strategy for higher conversion rates and boosted profits.

5. Measuring and understanding product–market fit

Before you launch a product, you need to know whether there’s market demand for it and whether your product will fit. Deep research via surveys can help ensure that when you do launch, you do so successfully to a ready and willing market.

6. Choosing effective testimonials

Testimonials from customers can help build trust with your brand, ensuring more people feel comfortable purchasing from your organization. Verbatim comments in surveys can be fantastic sources of testimonials.

Keep in mind that it’s always important to get permission before using comments from your participants in promotional materials.

Survey questions for deeper insights

To provide a truly customer-centric experience, you need to get into your customers’ minds. Surveys are one of the most helpful ways to do this.

Sending surveys to the right people at the right time gives you the chance to get deep insights you can act on to continually optimize your offering.

FAQs

How many questions should I include in a survey?

The right number of questions to include in a survey will depend on the type of survey, the participants being asked, the incentive you’re offering, and the context. Typically, though, the best surveys are brief. Some surveys might even contain just one question.

As a guideline, online surveys usually involve between 15 and 20 questions. Staying within this range helps you avoid overwhelming participants while still allowing you to draw accurate and helpful insights from the answers.

How do I get answers to my survey?

Finding participants for your surveys can be tricky. Sending your surveys to current customers can be a great way to get specific and relevant answers.

If you don’t have current customers, you can advertise your surveys on social media, send them to your email list, or approach an agency that can supply participants in your target market.

To get people interested, consider providing incentives. This can help boost engagement rates.

How do I write a good survey?

A good survey will depend entirely on your team’s goals, the people being surveyed, and the context of the product or service.

As a guide, good survey questions are typically clear and concise without leading or loaded statements. They don’t overwhelm participants with too many questions and stay on topic to avoid confusion.

What is the best response scale for a survey?

The best response scale for a survey will depend on the survey itself, the questions being asked, and the overall goal.

For response scales, 1–10 is often used as it’s easy to convert to percentages. This scale also offers enough options for respondents to share their views. The most important thing is choosing a response scale that’s relevant to the project at hand.
